What's new

Comparing results

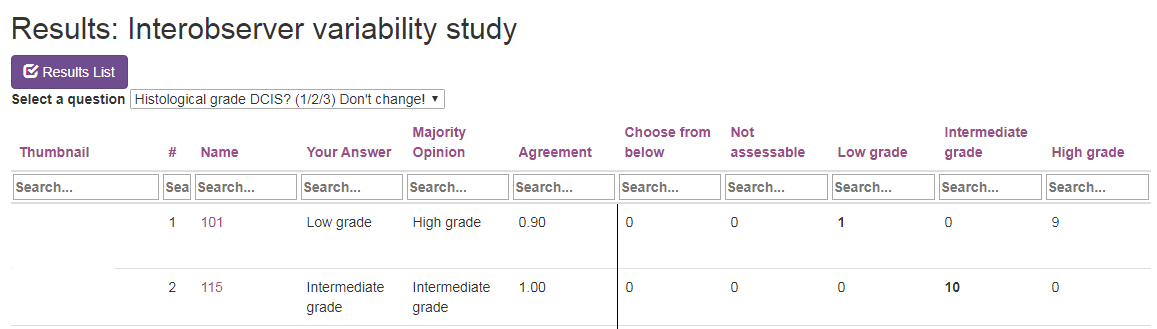

Slide Score has been used for studies assessing observer variability, in which multiple (10 or more) pathologists score the same set of slides and their scores are compared. Statistical measures such as Cohen's kappa or Fleiss' kappa are then used to draw conclusions. The questions (or slides) with the most or least agreement between pathologists are often of particular interest. These comparisons can also serve as feedback for individual pathologists, for example: "you scored these slides as low grade, but everyone else considers them high grade."
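To make the Fleiss' kappa mention concrete, here is a minimal sketch of the standard formula. It is not Slide Score's implementation. It assumes every slide was scored by the same number of pathologists, and the function name `fleiss_kappa` and the input layout (one list of answers per slide) are illustrative choices. Per-slide agreement is $P_i = \frac{\sum_j n_{ij}^2 - n}{n(n-1)}$, where $n_{ij}$ is the number of raters choosing category $j$ on slide $i$, and $\kappa = \frac{\bar{P} - \bar{P}_e}{1 - \bar{P}_e}$, where $\bar{P}_e = \sum_j p_j^2$ is the agreement expected by chance.

```python
from collections import Counter


def fleiss_kappa(ratings: list[list[str]]) -> float:
    """Fleiss' kappa for a hypothetical layout: ratings[i] holds one answer per rater for slide i.

    Assumes every slide has the same number of ratings (a requirement of the classic formula).
    """
    n_items = len(ratings)
    n_raters = len(ratings[0])
    if n_raters < 2 or any(len(r) != n_raters for r in ratings):
        raise ValueError("every slide needs the same number (>= 2) of ratings")

    categories = sorted({answer for slide in ratings for answer in slide})
    counts = [Counter(slide) for slide in ratings]

    # Observed agreement on each slide, then averaged over slides.
    p_i = [
        (sum(c[k] ** 2 for k in categories) - n_raters) / (n_raters * (n_raters - 1))
        for c in counts
    ]
    p_bar = sum(p_i) / n_items

    # Chance agreement from the overall share of each category.
    total = n_items * n_raters
    p_j = [sum(c[k] for c in counts) / total for k in categories]
    p_e = sum(p ** 2 for p in p_j)
    if p_e == 1.0:
        raise ValueError("kappa is undefined when all ratings fall in one category")

    return (p_bar - p_e) / (1 - p_e)
```

For example, `fleiss_kappa([["high", "high", "high"], ["low", "low", "low"]])` returns `1.0` (perfect agreement), while values near or below zero indicate agreement no better than chance. Libraries such as statsmodels also provide a Fleiss' kappa implementation.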

Slide Score includes such a results comparison view, and it is now available to all studies: you just need to enable it in the study administration settings. For each slide and question, it shows your answer next to the majority opinion, along with how many pathologists chose each of the answer options. You can sort by agreement, that is, the percentage of pathologists who selected the majority answer.
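The agreement value described above can be sketched in a few lines. This is an illustration of the idea, not Slide Score's code. The data layout (a mapping from slide name to a mapping of pathologist to answer) and the names `summarize_slide` and `rank_by_agreement` are hypothetical. How the real view breaks ties between equally popular answers is not stated; this sketch simply keeps whichever tied answer was seen first.

```python
from collections import Counter


def summarize_slide(answers: dict[str, str], me: str) -> dict:
    """Summarize one slide; `answers` maps a (hypothetical) pathologist id to their answer."""
    counts = Counter(answers.values())
    # most_common keeps first-seen order among ties (an assumption about tie handling).
    majority, majority_count = counts.most_common(1)[0]
    mine = answers.get(me)
    return {
        "counts": dict(counts),              # how many pathologists chose each option
        "majority": majority,                # the majority opinion
        "agreement": majority_count / len(answers),  # share who chose the majority answer
        "my_answer": mine,
        "matches_majority": mine == majority,
    }


def rank_by_agreement(study: dict[str, dict[str, str]], me: str) -> list[tuple[str, dict]]:
    """Return (slide, summary) pairs sorted from least to most agreement."""
    rows = [(slide, summarize_slide(answers, me)) for slide, answers in study.items()]
    return sorted(rows, key=lambda row: row[1]["agreement"])
```

With `study = {"slide-01": {"anna": "high", "ben": "high", "cara": "low", "dev": "high"}, "slide-02": {"anna": "low", "ben": "high", "cara": "low", "dev": "intermediate"}}`, slide-01 has 75% agreement on "high", slide-02 has 50% agreement on "low", and `rank_by_agreement(study, "cara")` lists slide-02 first. Sorting ascending puts the most contested slides at the top, which is usually where a reviewer wants to start.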

We hope this feature will help you better understand interobserver variability!